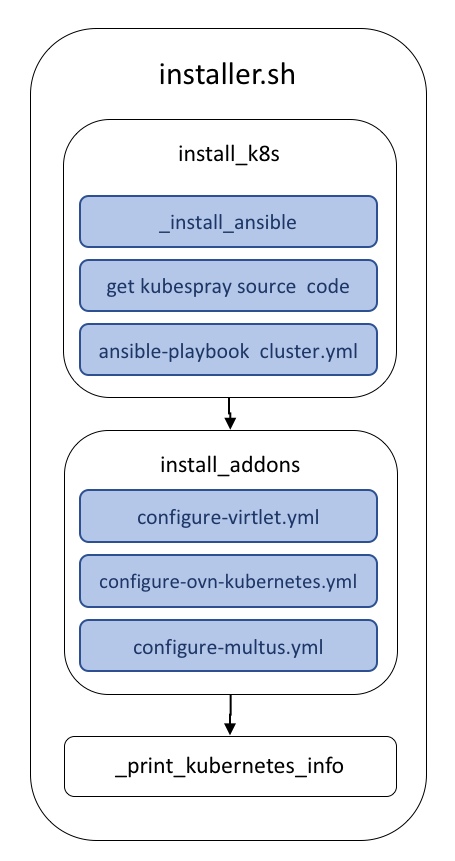

This project offers a means of deploying a Kubernetes cluster that satisfies the requirements of the ONAP multicloud/k8s plugin.

Its Ansible playbooks allow provisioning a deployment on Virtual Machines and on Baremetal.

KuD facilitates virtual deployment using Vagrant and Baremetal deployment using the All-in-one script.

Components

| Name | Description | Source | Status |
|---|---|---|---|
| Kubernetes | Base Kubernetes deployment | kubespray | Done |
| ovn4nfv | Integrates Opensource Virtual Networking | configure-ovn4nfv.yml | Tested |
| Virtlet | Allows running VMs | configure-virtlet.yml | Tested |
| Multus | Provides Multiple Network support in a pod | configure-multus.yml | Tested |
| NFD | Node feature discovery | configure-nfd.yml | Tested |
| Istio | Service Mesh platform | configure-istio.yml | Tested |

Deployment

To deploy KuD independently of ICN, please refer to the documents/instructions here.

The items and code blocks involved are listed below for clarity.

To get the KuD offline mode working, we have to do the following:

- Get all the dependency packages and resolve the dependencies in the right order

- Install basic components for KuD

- Run the installer script

- Docker

- Ansible

- Get the kubespray prescribed version listed in KuD

- Get the correct versions of kubeadm, etcd, hyperkube, and cni

- Get the docker images used by kubespray

- Load them, making sure the right versions are available.

- We had to override the following defaults in Kubespray:

  - download_run_once: true

  - download_localhost: true

  - skip_downloads: true

  - strict_dns_check: false

  - update_cache: false

  - helm_client_refresh: false

- Get galaxy-requirements

- Get the galaxy-requirements dependencies

- Run the roles

- Get add-ons

- Modify the Ansible script so that it uses the files from the release dir instead of pulling from the web, then run the playbook. (Tested for Multus)

- Run all the addon playbooks

- KuD offline done. Run the test cases to verify
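The Kubespray overrides listed above could be collected in a single vars file. The fragment below is a minimal sketch, assuming they are placed in k8s-cluster.yml and that the variable names use the lowercase spellings Kubespray follows. The names and values are taken from the list above and have not been checked against a specific Kubespray release, so verify them against the release KuD prescribes.

```yaml
# Sketch only: offline-mode overrides from the list above.
# Variable spellings are assumed (lowercase, typos corrected);
# confirm each one exists in the Kubespray release in use.
download_run_once: true
download_localhost: true
skip_downloads: true
strict_dns_check: false
update_cache: false
helm_client_refresh: false
```

Keeping the overrides together in one file makes it easier to tell them apart from the defaults that had to be changed directly in the Kubespray code.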

Challenges

The values listed above are settings that can be overridden in k8s-cluster.yml. However, some changes did not have this option, and in those cases we had to manually change defaults in the Kubespray code.

One such place is the docker_version check in ubuntu-bionic.yml. The existing version is 18.06.0, while the expected version is 18.06.1. We tried to fix this by supplying the version requested by Kubespray, but the check still fails unless we change the hardcoded version in https://github.com/kubernetes-sigs/kubespray/blob/release-2.8/roles/container-engine/docker/vars/ubuntu-bionic.yml#L6