- Motivation
- Architecture blocks
- Setup information
  - Online setup
  - Offline setup (WIP)

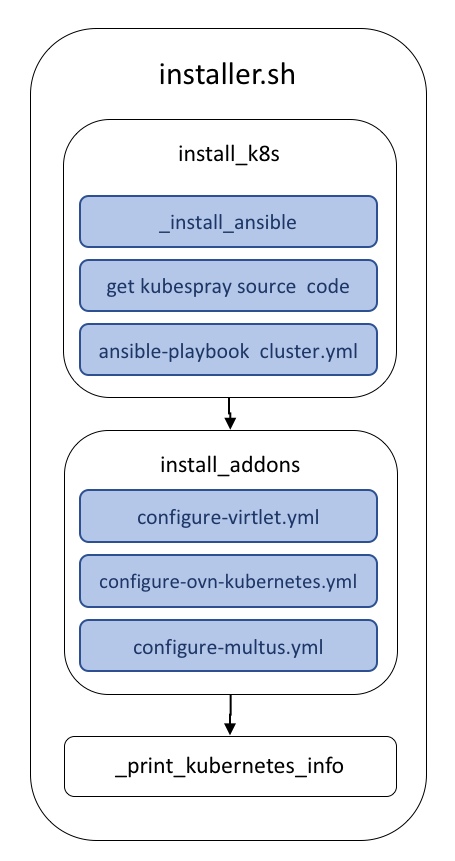

- Proposed workflow diagram
- Offline setup flow - tested
- Challenges

Motivation

This project offers a means of deploying a Kubernetes cluster that satisfies the requirements of the ONAP multicloud/k8s plugin.

Its Ansible playbooks can provision a deployment on virtual machines or on bare metal.

KuD supports virtual deployments with Vagrant and bare-metal deployments with the all-in-one script.

| Name | Description | Source | Status |
|---|---|---|---|
| Kubernetes | Base Kubernetes deployment | kubespray | Done |
| ovn4nfv | Integrates Open Virtual Network (OVN) networking | configure-ovn4nfv.yml | Tested |
| Virtlet | Runs VM workloads as Kubernetes pods | configure-virtlet.yml | Tested |
| Multus | Provides multiple network interfaces in a pod | configure-multus.yml | Tested |
| NFD | Node Feature Discovery | configure-nfd.yml | Tested |
| Istio | Service mesh platform | configure-istio.yml | Tested |
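The add-ons in the table are each installed by their own playbook. A minimal sketch of running them one after another with `ansible-playbook` follows; the playbook directory, the inventory path and the run order are hypothetical placeholders, not KuD's actual layout.

```python
import subprocess
from pathlib import Path

# Playbook names from the table above; the order here is an assumption.
ADDON_PLAYBOOKS = (
    "configure-ovn4nfv.yml",
    "configure-virtlet.yml",
    "configure-multus.yml",
    "configure-nfd.yml",
    "configure-istio.yml",
)


def build_commands(playbook_dir, inventory, playbooks=ADDON_PLAYBOOKS):
    """Return one ansible-playbook argv list per add-on playbook."""
    base = Path(playbook_dir)
    return [
        ["ansible-playbook", "-i", str(inventory), str(base / name)]
        for name in playbooks
    ]


def run_addons(playbook_dir, inventory, dry_run=True):
    """Print each command; when dry_run is False, also run it and stop on failure."""
    for cmd in build_commands(playbook_dir, inventory):
        print(" ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError if a playbook fails.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical paths; adjust them to the actual KuD checkout.
    run_addons("playbooks", "inventory/hosts.ini")
```

Stopping at the first failing playbook keeps a broken add-on from being hidden behind later ones.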

Architecture blocks

Setup information

Online setup

To deploy KuD independently of ICN, refer to the documentation and instructions here.

Offline setup (WIP)

Offline setup flow - tested

- Get all the dependency packages and resolve the dependencies in the right order.
- Install the basic components for KuD:
  - Run the installer script.
  - Docker
  - Ansible
- Get the Kubespray version prescribed in KuD:
  - Get the correct versions of kubeadm, etcd, hyperkube and CNI.
  - Get the Docker images used by Kubespray.
  - Load them, making sure the right versions are available.
  - We had to override the following Kubespray defaults:
    - `download_run_once: true`
    - `download_localhost: true`
    - `skip_downloads: true`
    - `strict_dns_check: false`
    - `update_cache: false`
    - `helm_client_refresh: false`
- Get the galaxy-requirements:
  - Get the dependencies of the galaxy requirements.
  - Run the roles.
- Get the add-ons:
  - Modify the Ansible scripts so that they use the files in the release directory instead of pulling from the web, then run the playbook (tested for Multus).
  - Run all the add-on playbooks.
- The offline KuD setup is complete. Run the test cases to verify it.
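Resolving dependency packages "in the right order" is a topological sort: every package must come after the packages it depends on. A minimal sketch using Python's standard `graphlib` module (Python 3.9+) is shown below; the package names and dependency edges are hypothetical examples, not the actual KuD package set.

```python
from graphlib import CycleError, TopologicalSorter  # Python 3.9+


def install_order(depends_on):
    """Return package names so that every package follows its dependencies.

    depends_on maps a package to the packages that must be installed first.
    Raises CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(depends_on).static_order())


# Hypothetical package names and edges, for illustration only.
deps = {
    "docker-ce": {"containerd.io", "libseccomp2"},
    "containerd.io": {"libseccomp2"},
    "ansible": {"python3-yaml", "python3-jinja2"},
    "python3-jinja2": {"python3-markupsafe"},
}

if __name__ == "__main__":
    for pkg in install_order(deps):
        print(pkg)
```

In practice the edges could be collected from a tool such as `apt-cache depends` on a connected machine before the packages are copied to the offline host.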
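The step of loading the Kubespray images and "making sure the right versions are available" can be checked before running the playbooks. The sketch below compares a required list of `repository:tag` references against the local image store; the entries in `REQUIRED` are placeholders, and the real list would have to be taken from the image settings of the pinned Kubespray release's download role.

```python
import subprocess


def missing_images(required, available):
    """Return the required repo:tag references absent from `available`, in input order."""
    present = set(available)
    return [ref for ref in required if ref not in present]


def local_images():
    """List repo:tag for every image in the local Docker image store."""
    out = subprocess.run(
        ["docker", "images", "--format", "{{.Repository}}:{{.Tag}}"],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    return [line for line in out.splitlines() if line]


# Placeholder references; replace with the images the pinned Kubespray expects.
REQUIRED = [
    "k8s.gcr.io/pause:3.1",
    "quay.io/coreos/etcd:v3.2.24",
]
```

On the offline host, `missing_images(REQUIRED, local_images())` returns an empty list once every expected image and tag has been loaded. Matching on the exact tag is deliberate, because a wrong version is as much of a problem here as a missing image.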

Challenges

The values listed above could be overridden in k8s-cluster.yml. However, some of the required changes had no such option, so in some cases we had to change defaults manually in the Kubespray code.

One such place is the docker_version check in ubuntu-bionic.yml: the existing version is 18.06.0, whereas 18.06.1 is expected. We tried to fix this by supplying the version requested by Kubespray, but the run still fails unless we change the hardcoded version at https://github.com/kubernetes-sigs/kubespray/blob/release-2.8/roles/container-engine/docker/vars/ubuntu-bionic.yml#L6

Another place where we encountered issues is the Ansible task `name: ensure docker packages are installed`, specifically its `update_cache` setting:

`update_cache: "{{ omit if ansible_distribution == 'Fedora' else False }}"`
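For the values that can be set as variables, a small helper could write them into the cluster's k8s-cluster.yml instead of editing it by hand. This is a minimal sketch that assumes all six values from the offline flow are plain Ansible variables; as described above, some of them may still need changes in the Kubespray code instead.

```python
import json

# Values from the offline flow; whether each one takes effect as a variable is an assumption.
OFFLINE_OVERRIDES = {
    "download_run_once": True,
    "download_localhost": True,
    "skip_downloads": True,
    "strict_dns_check": False,
    "update_cache": False,
    "helm_client_refresh": False,
}


def render_overrides(overrides):
    """Render a flat dict as YAML `key: value` lines.

    json.dumps emits true/false for booleans and double-quoted strings,
    both of which are valid YAML scalars.
    """
    return "".join(f"{key}: {json.dumps(value)}\n" for key, value in overrides.items())


def append_overrides(path, overrides=OFFLINE_OVERRIDES):
    """Append the overrides to an existing group_vars file such as k8s-cluster.yml."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write("\n# Offline deployment overrides\n")
        fh.write(render_overrides(overrides))
```

If the file already defines one of these keys, appending creates a duplicate key; Ansible generally keeps the last value but warns about it, so replacing the existing line is the cleaner option.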

We currently have the K8s cluster set up in offline mode on the client-server machine replicated in the lab. However, offline support is poor in Kubespray v2.8.2, the version currently used by KuD. Setting up the galaxy requirements has been a blocker, and we have developed a workaround to get the add-ons going.

The current KuD system has a lot of Ansible code that will be rewritten.

Proposed workflow

- Have KuD live running for the August 15 release.
- September 15 priorities:
  - Update Kubespray from 2.8.2 to 2.11 to take advantage of the Kubespray caching features available in master.
  - Test it on the KuD-live version.
  - Integrate the new daemon sets with the online version, since some of them require newer versions of kubeadm and kubectl, which are updated automatically once Kubespray is updated.
  - Convert existing add-ons such as Virtlet, NFD, CMK and Rook into daemon sets, and test the converted add-ons in live KuD. As a related dependency, we should also keep non-daemon-set add-ons to test our infrastructure.
  - Provide the OVN installation package information and the OVN daemonset.yaml (Ritu?).
  - Set up a Docker registry that hosts the container images to be pulled from the provisioned servers.
- October 15 priorities:
  - Host the packages needed by the add-ons (if any) on an HTTP server so that the add-on scripts do not have to be modified.
  - Have the deployment pull all the Docker images used by Kubespray from here.
  - Create Ansible roles that help maintain a single version of KuD for both online and offline deployment.
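Pulling Kubespray's images from a local Docker registry means rewriting each image reference so that it points at the mirror while keeping its repository path and tag. A minimal sketch follows; the registry address is a hypothetical placeholder, and the host-detection rule is Docker's own: the first path component counts as a registry host only if it contains `.` or `:` or is `localhost`, and bare names are official images under `library/`.

```python
# Hypothetical mirror address; replace it with the lab registry's host:port.
LOCAL_REGISTRY = "registry.lab.local:5000"


def mirror_ref(image, registry=LOCAL_REGISTRY):
    """Point an image reference at `registry`, keeping its repository path and tag."""
    first, sep, rest = image.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        path = rest                 # drop the original registry host
    elif sep:
        path = image                # Docker Hub user/org image
    else:
        path = "library/" + image   # official Docker Hub image
    return f"{registry}/{path}"


if __name__ == "__main__":
    for ref in ["k8s.gcr.io/pause:3.1", "nginx:1.17", "coredns/coredns:1.2.6"]:
        print(ref, "->", mirror_ref(ref))
```

Each image would then be retagged with `docker tag` and pushed with `docker push`. How the mirrored names are fed back into Kubespray (for example through its image repository variables) is an assumption to verify against the pinned release.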